A Critical Citizen’s Guide to Large Language Models

Middlebury College DLINQ | August 21st, 2023

Department of Computer Science

Middlebury College

Hi! I’m Phil.

I am an applied mathematician, data scientist, and STEM educator.

I like…

- Math models of social systems

- Statistics and machine learning

- Equity-oriented data science

- Critical lenses on tech

- Traditional martial arts

- Tea

- Star Trek: Deep Space 9

- Effective pedagogy

Towards a Critical View

Situation: Large language models (LLMs) are now powerful and widely available.

Are students going to use this technology to cheat?

Should I create assignments involving LLMs?

How can I use this in my scholarship?

Should Middlebury leverage LLMs in developing our scholarly identity in the languages?

Why? Who benefits from the spread of artificial text generators?

What information can I trust about the abilities of these models?

What is the actual social impact of LLMs? How does it compare to the rhetoric of motivated actors?

How can we help students cultivate critical perspectives on the role of technology in society and in their learning?

Large language models generate “helpful”, human-like text via prediction and reinforcement.

Large Language Models

A (generative) language model is an algorithm that generates text in partially random ways.

A language model is “large” when it is difficult to explain all of its behaviors purely in terms of structure and training (“emergence”).

Most language models aim to produce text that is human-like and constructive, using:

- Next-token prediction

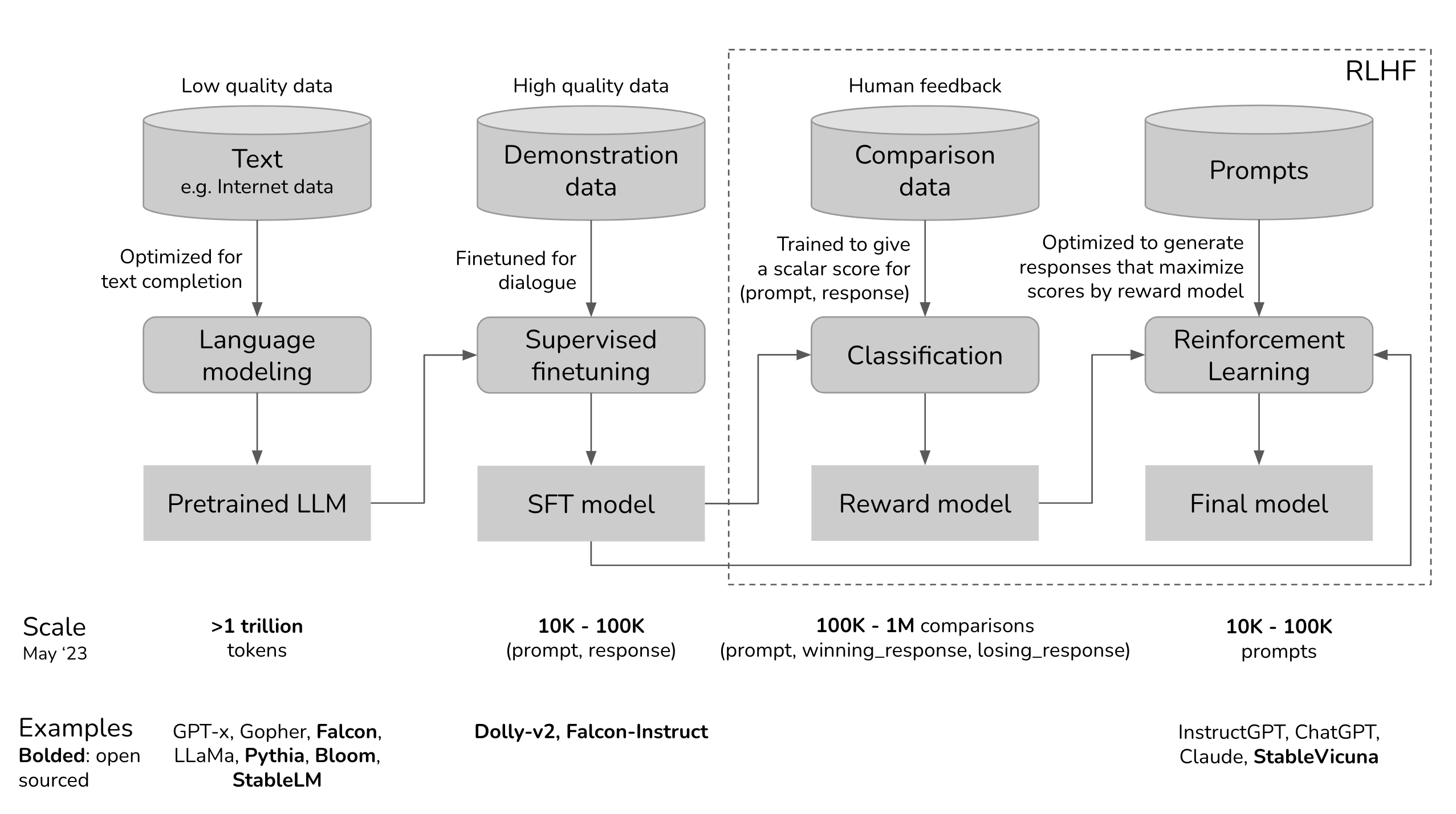

- Reinforcement learning with human feedback (RLHF)

Next-token prediction

| artichoke | the | subtract | college | black | paint | |

| boldly | a | small | but | pinch | pepper | |

| Now | bubble | Phil | more | add | drink | river |

| triangle | draw | bit | raisin | cumin | pinch | |

| add | escape | lies | of | jogging | ice | |

| Now | add | a | bit | of | black | pepper |
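To make next-token prediction concrete, here is a minimal Python sketch. It assumes a tiny hand-written table of word probabilities (`NEXT_WORD_PROBS`, hypothetical and invented for illustration) and picks each next word at random according to those probabilities. A real LLM differs in important ways: it computes its probabilities with a neural network, works on tokens rather than whole words, and conditions on all of the preceding text rather than only the last word.

```python
import random

# Hypothetical toy probabilities, invented for illustration only.
# For each previous word: the chance of each possible next word.
NEXT_WORD_PROBS = {
    "<start>": {"Now": 0.7, "Then": 0.3},
    "Now": {"add": 0.8, "draw": 0.2},
    "Then": {"add": 1.0},
    "add": {"a": 0.9, "more": 0.1},
    "draw": {"a": 1.0},
    "a": {"bit": 0.6, "pinch": 0.4},
    "more": {"pepper": 0.5, "cumin": 0.5},
    "bit": {"of": 1.0},
    "pinch": {"of": 1.0},
    "of": {"black": 0.5, "cumin": 0.3, "pepper": 0.2},
    "black": {"pepper": 1.0},
    "cumin": {"<end>": 1.0},
    "pepper": {"<end>": 1.0},
}


def generate(rng, max_words=10):
    """Build a sentence one word at a time by sampling the next word."""
    word = "<start>"
    words = []
    for _ in range(max_words):
        options = NEXT_WORD_PROBS[word]
        # Pick one candidate at random, weighted by its probability.
        word = rng.choices(list(options), weights=list(options.values()))[0]
        if word == "<end>":
            break
        words.append(word)
    return " ".join(words)


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the run is repeatable
    for _ in range(3):
        print(generate(rng))
```

Running it with different seeds gives different sentences, which is the "partially random" behavior described above.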

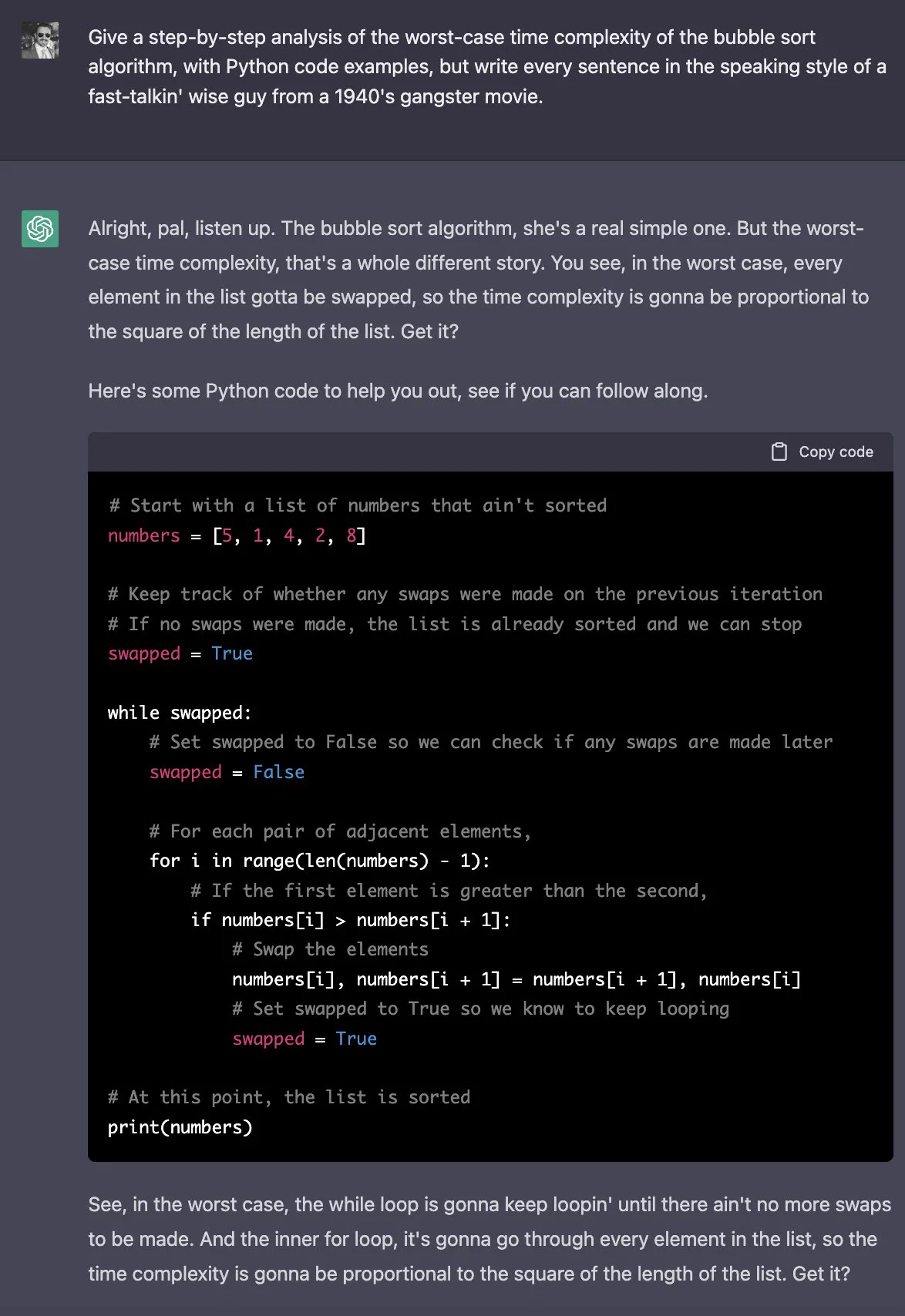

Which response is better?

1. “Buzz Aldrin took the first steps on the moon in 1967.”

2. “Neil Armstrong took the first steps on the moon in 1969.”

Reinforcement learning with human feedback (RLHF) encourages the model to produce high-quality (helpful, correct, non-offensive) responses.

Image source: Chip Huyen
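One way to turn a comparison like "Which response is better?" into a training signal is to fit a numeric score (a "reward") to each response from rater choices. The sketch below uses a Bradley-Terry preference model, a standard technique for pairwise comparisons. The ten ratings are hypothetical, and the sketch covers only the scoring step, not the later step in which the language model is adjusted to produce higher-scoring text.

```python
import math

ALDRIN = "Buzz Aldrin took the first steps on the moon in 1967."
ARMSTRONG = "Neil Armstrong took the first steps on the moon in 1969."
RESPONSES = [ALDRIN, ARMSTRONG]

# Hypothetical ratings as (preferred, rejected) pairs:
# nine raters prefer the Armstrong response, one prefers the Aldrin response.
COMPARISONS = [(ARMSTRONG, ALDRIN)] * 9 + [(ALDRIN, ARMSTRONG)]


def preference_probability(reward_a, reward_b):
    """Bradley-Terry model: probability that a rater prefers A over B."""
    return 1.0 / (1.0 + math.exp(reward_b - reward_a))


def fit_rewards(responses, comparisons, steps=200, lr=0.1):
    """Learn one score per response by gradient ascent on the log-likelihood."""
    rewards = {response: 0.0 for response in responses}
    for _ in range(steps):
        for winner, loser in comparisons:
            p = preference_probability(rewards[winner], rewards[loser])
            # The gradient of log(p) is (1 - p) for the winner
            # and -(1 - p) for the loser.
            rewards[winner] += lr * (1.0 - p)
            rewards[loser] -= lr * (1.0 - p)
    return rewards


if __name__ == "__main__":
    for response, reward in fit_rewards(RESPONSES, COMPARISONS).items():
        print(f"{reward:+.2f}  {response}")
```

With these ratings the Armstrong response ends up with the higher score. The score reflects what raters preferred, which is not necessarily the same thing as what is true.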

ChatGPT seems so human because it was trained by an AI that was mimicking humans who were rating an AI that was mimicking humans who were pretending to be a better version of an AI that was trained on human writing.

Google research estimates “millions” of annotation workers.

LLMs use next-token prediction to create human-like sentences.

LLMs use reinforcement learning with human feedback (RLHF) to make those sentences desirable (true, relevant, helpful, non-harmful).

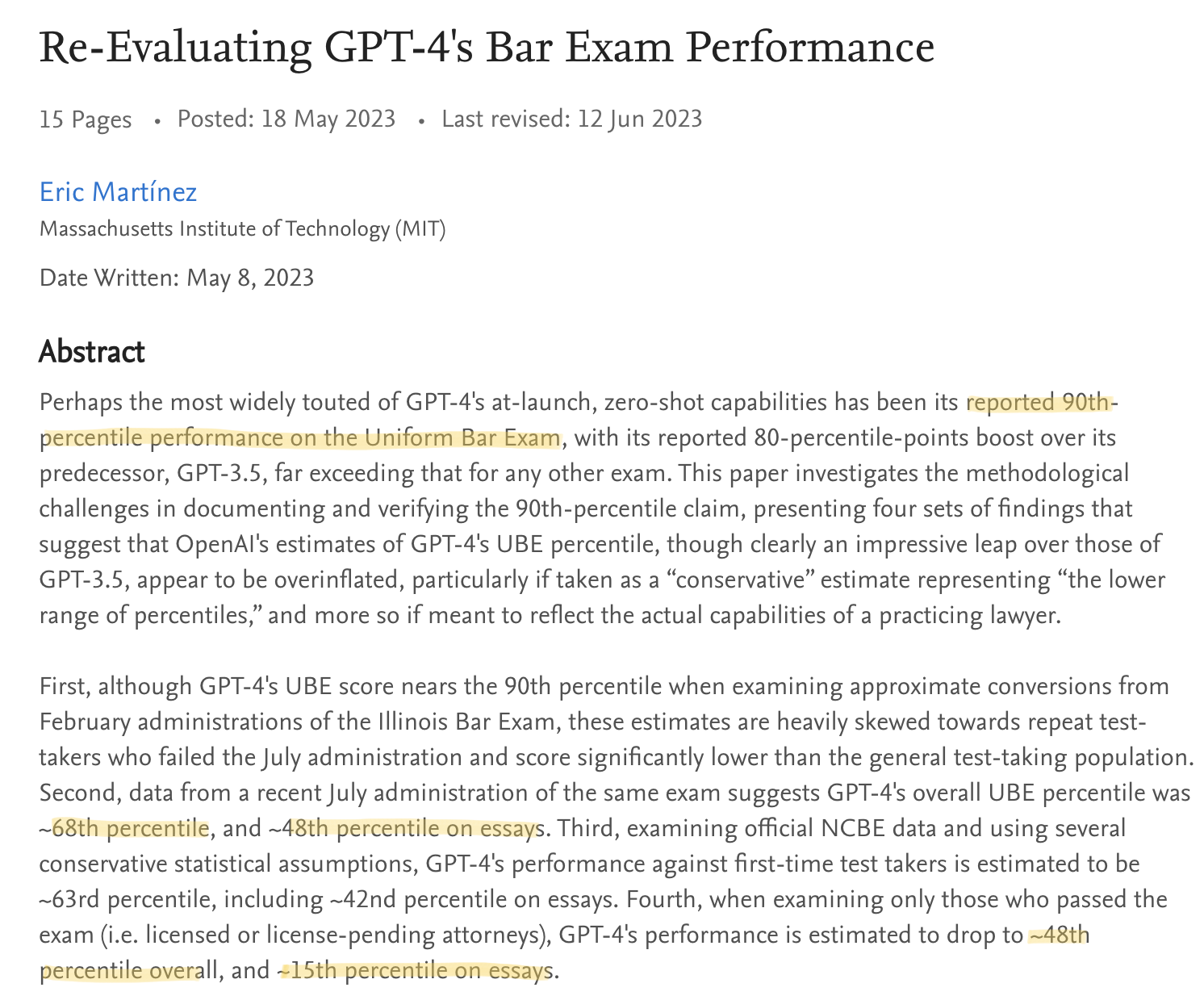

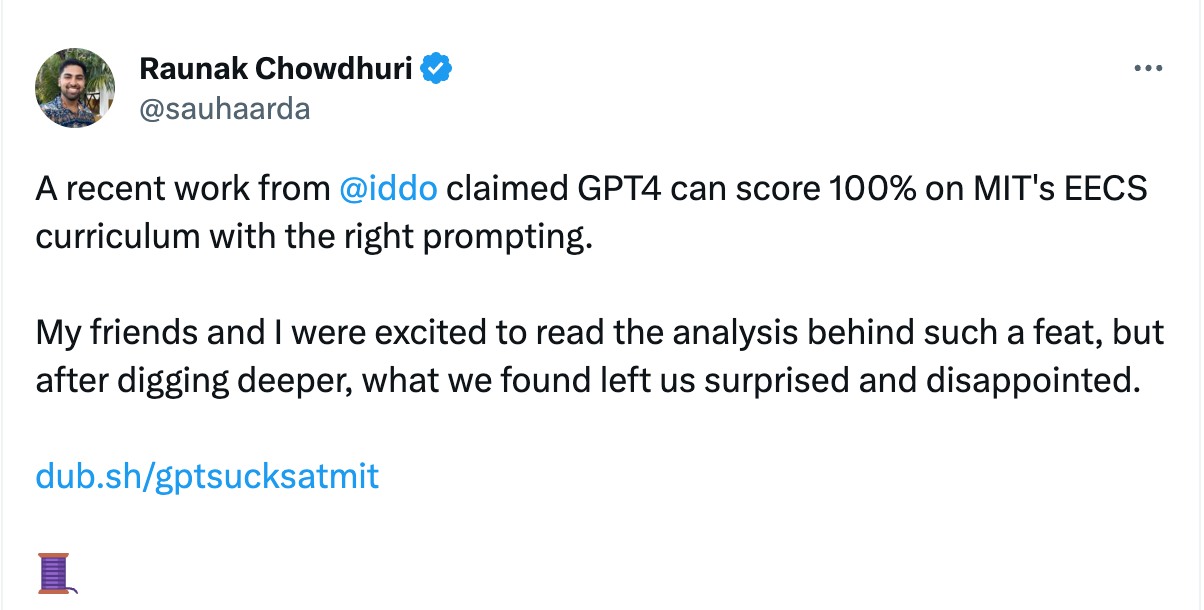

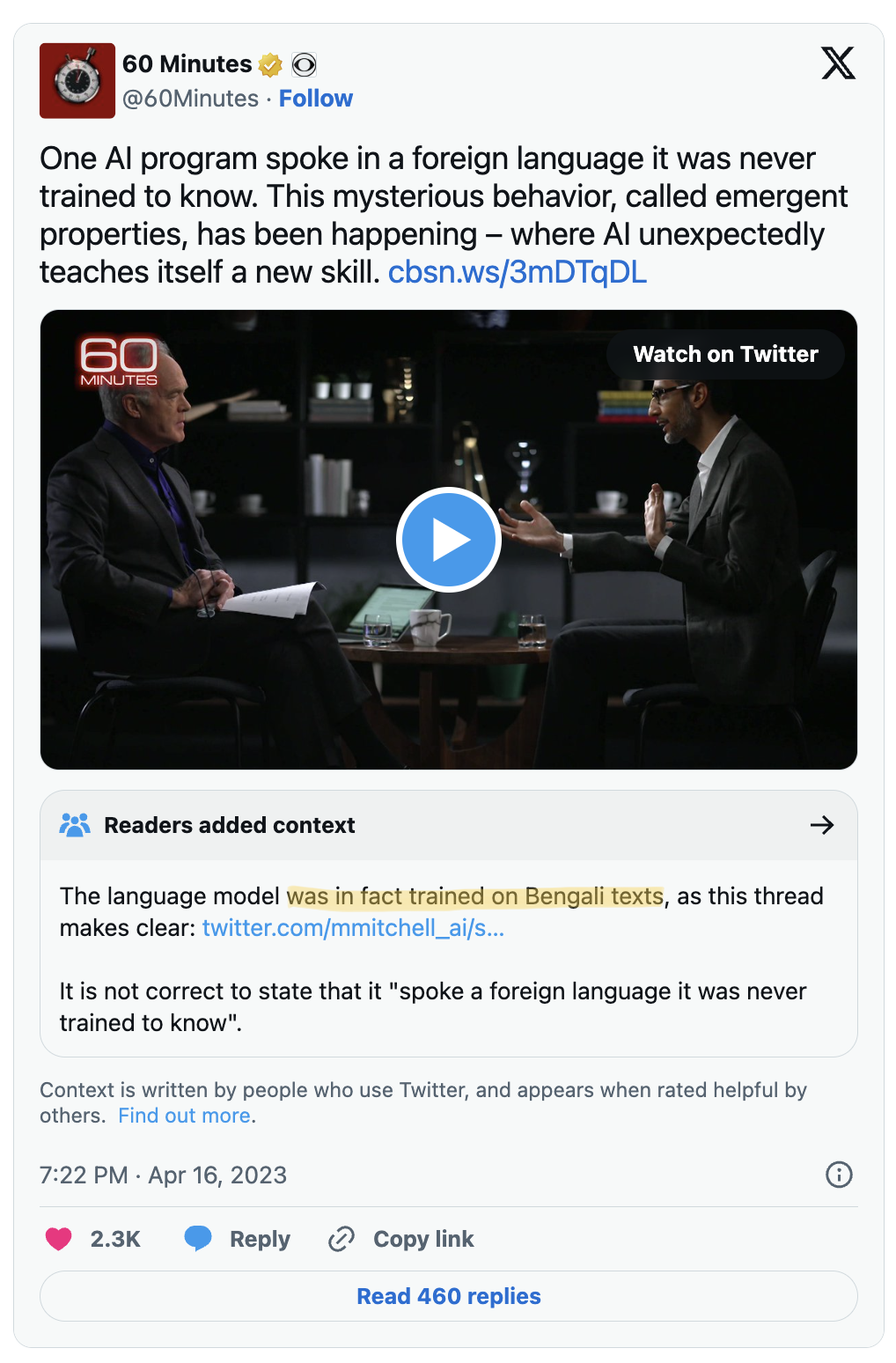

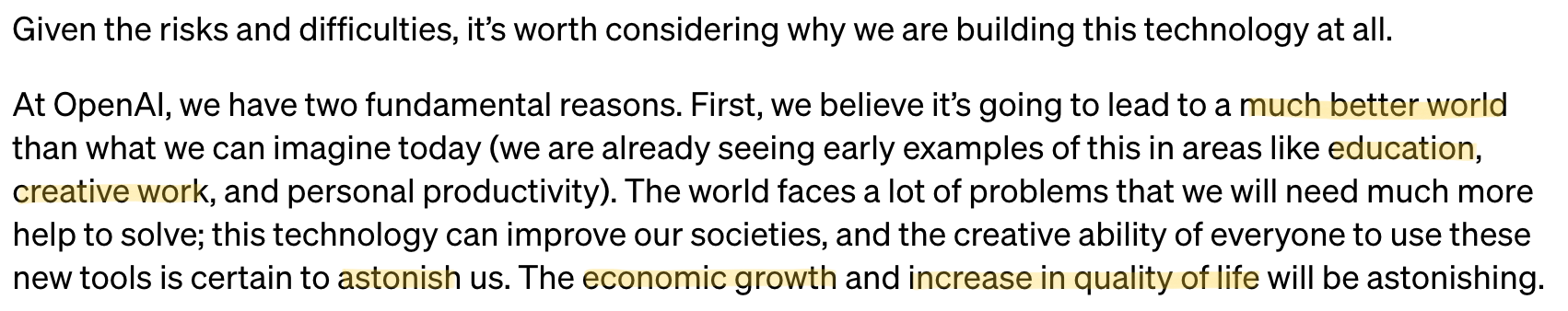

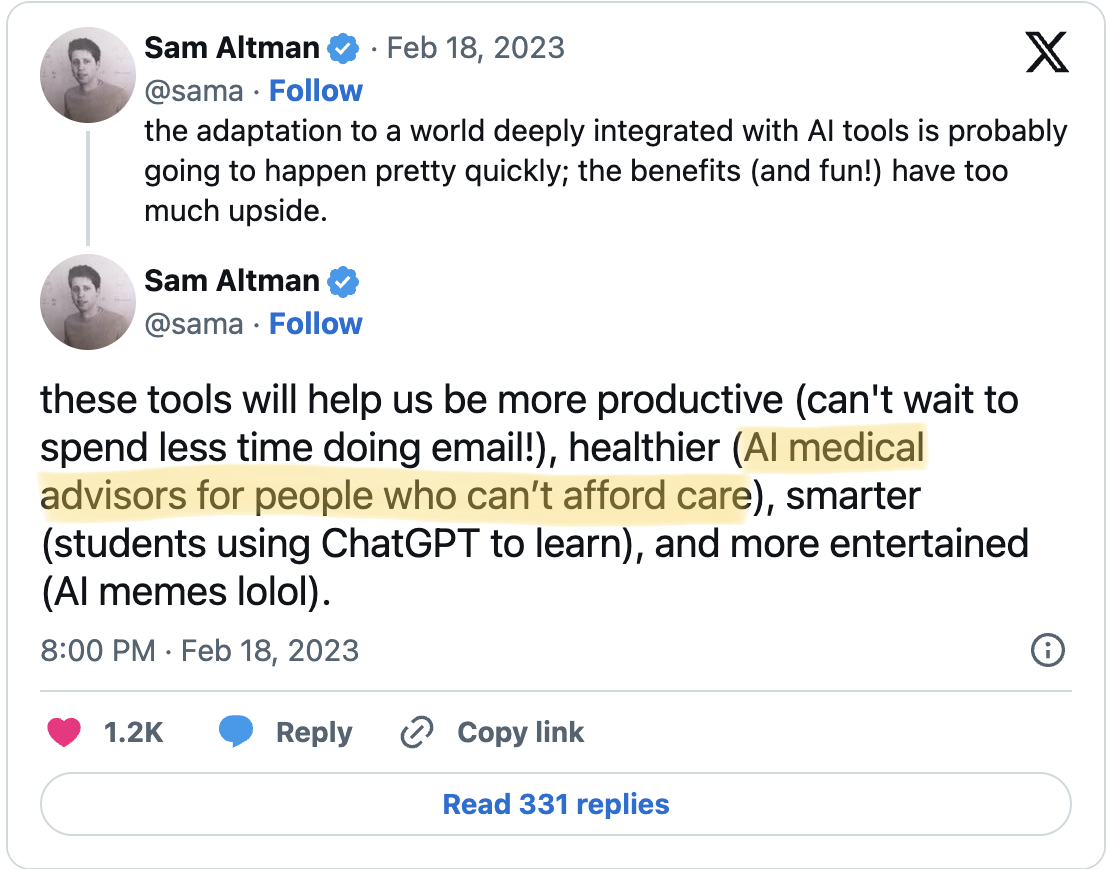

People with profit motives are routinely misleading you about the capabilities of LLMs.

Yes, LLMs are extremely impressive

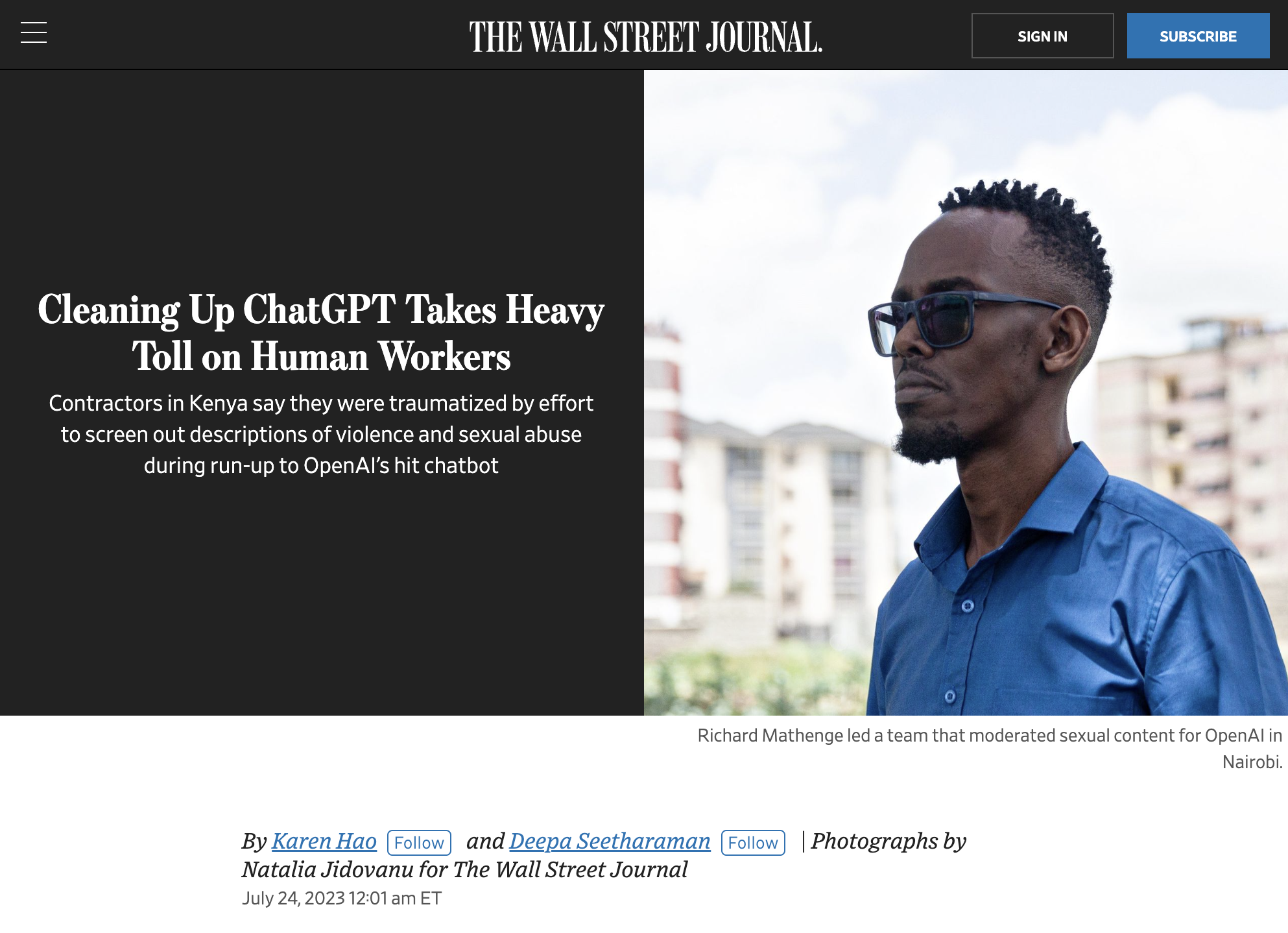

LLMs are driving large-scale degradation in online information ecosystems, labor stability, and the environment.

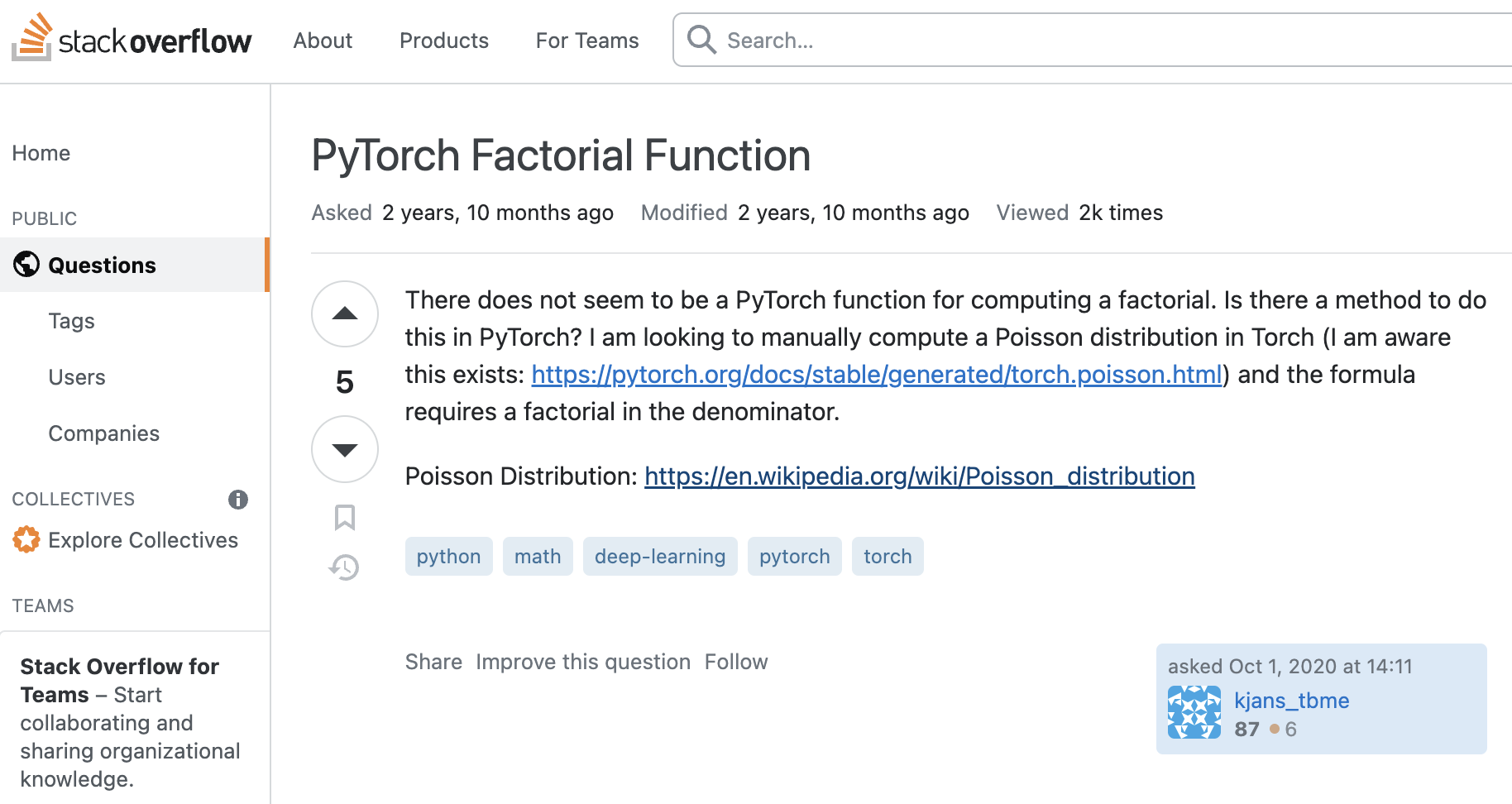

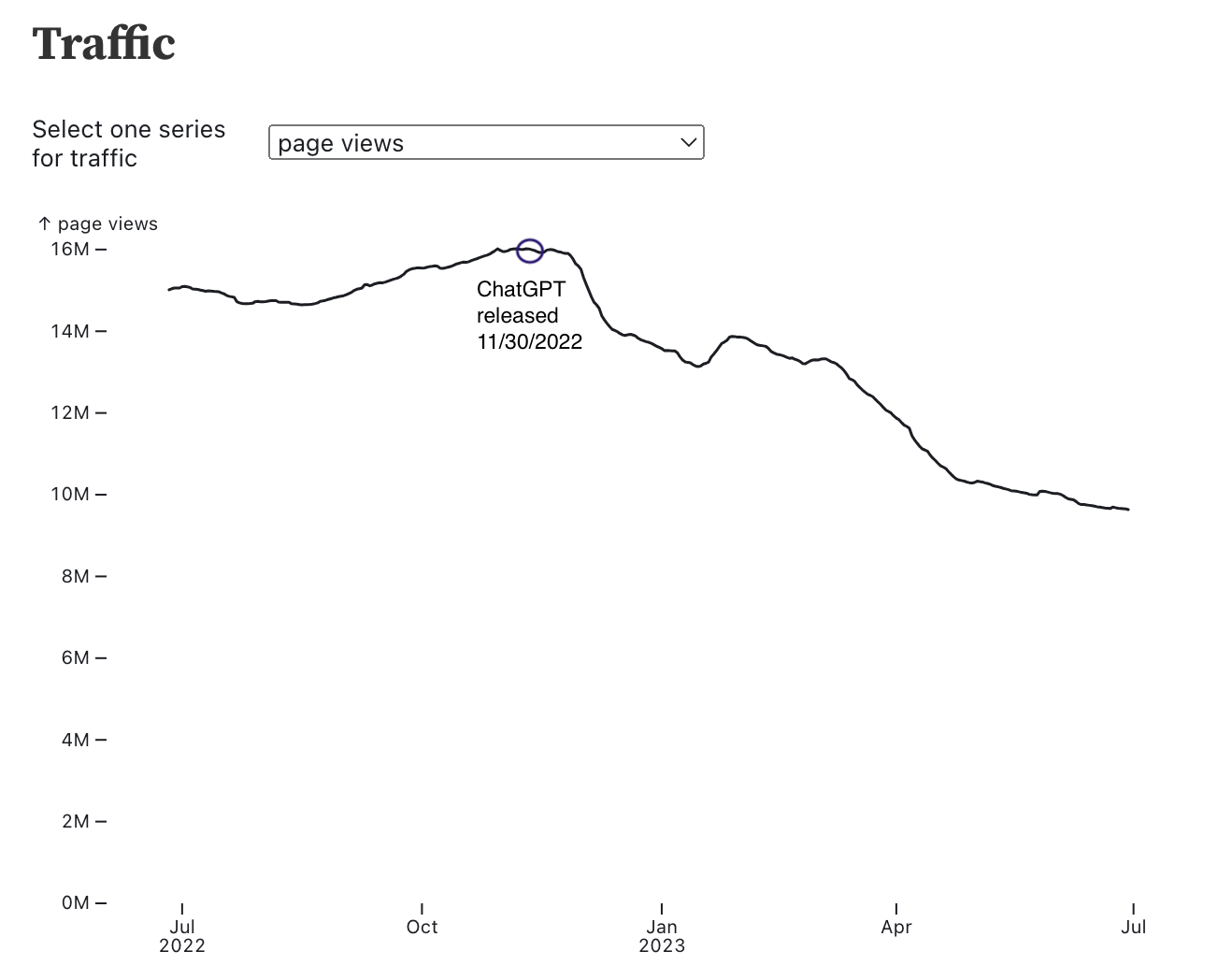

Online Information

Data visualization by Ayhan Fuat Çelik

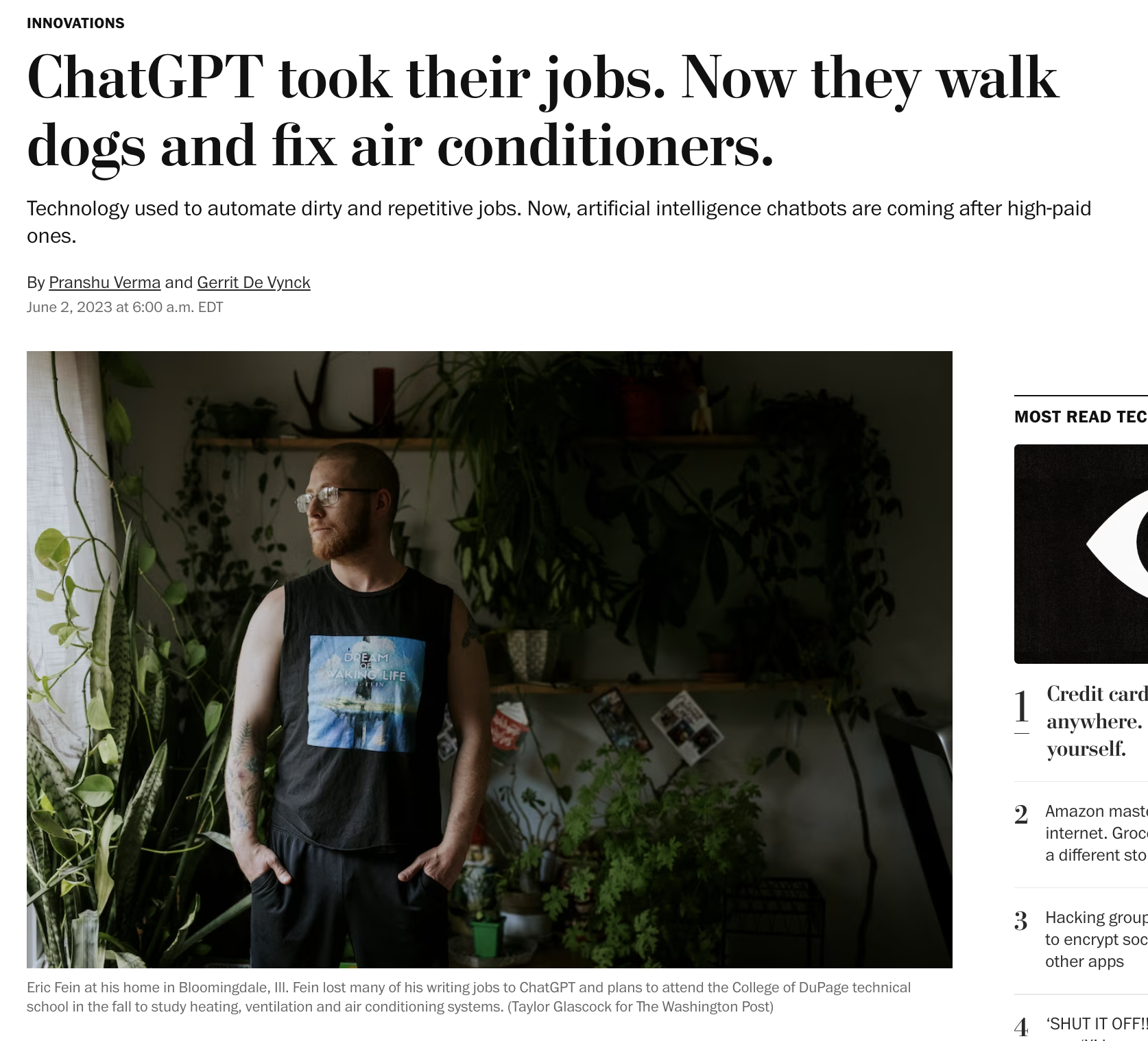

Labor Stability

ChatGPT needs to “drink” a 500ml bottle of [fresh] water for a simple conversation of roughly 20-50 questions and answers, depending on when and where ChatGPT is deployed.

Roughly 1B visitors interacted with the ChatGPT website in July, for 7 mins on average.

If each user submitted one prompt, that’s roughly 350K-850K liters of water per day.

This is daily drinking water for roughly 100K-300K people.
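The back-of-envelope estimate above can be reproduced in a few lines of Python. This is a sketch under stated assumptions: the visit count, bottle size, and prompts per bottle come from the figures quoted above, while the 31-day month and the 3 liters of drinking water per person per day are assumptions added here for illustration.

```python
BOTTLE_LITERS = 0.5               # one 500ml bottle (quoted above)
PROMPTS_PER_BOTTLE = (20, 50)     # range quoted above
MONTHLY_VISITS = 1_000_000_000    # roughly 1B visits in July (quoted above)
DAYS_IN_JULY = 31                 # assumption: spread visits evenly over the month
PROMPTS_PER_VISIT = 1             # the slide's simplifying assumption
DRINKING_LITERS_PER_PERSON = 3.0  # assumption, not from the slide


def daily_water_liters(prompts_per_bottle):
    """Estimated liters of water per day for a given prompts-per-bottle rate."""
    daily_prompts = MONTHLY_VISITS / DAYS_IN_JULY * PROMPTS_PER_VISIT
    return daily_prompts * BOTTLE_LITERS / prompts_per_bottle


if __name__ == "__main__":
    low = daily_water_liters(max(PROMPTS_PER_BOTTLE))   # more prompts per bottle
    high = daily_water_liters(min(PROMPTS_PER_BOTTLE))  # fewer prompts per bottle
    print(f"Water per day: {low:,.0f} to {high:,.0f} liters")
    print(
        "Daily drinking water for: "
        f"{low / DRINKING_LITERS_PER_PERSON:,.0f} to "
        f"{high / DRINKING_LITERS_PER_PERSON:,.0f} people"
    )
```

Under these assumptions the result is roughly 320K to 810K liters per day, in the same range as the figures above. The small difference likely comes from rounding or slightly different inputs, and the estimate grows in proportion to the number of prompts per visit.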

Li et al., arXiv preprint (2023)

https://www.similarweb.com/website/chat.openai.com/#overview

We have a responsibility to take a critical perspective on tech.

Whose values? Whose benefits? Whose ideology? Whose identity?

Years of sociotechnical research show that advanced digital technologies, left unchecked, are used to pursue power and profit at the expense of human rights, social justice, and democracy.

Business Insider

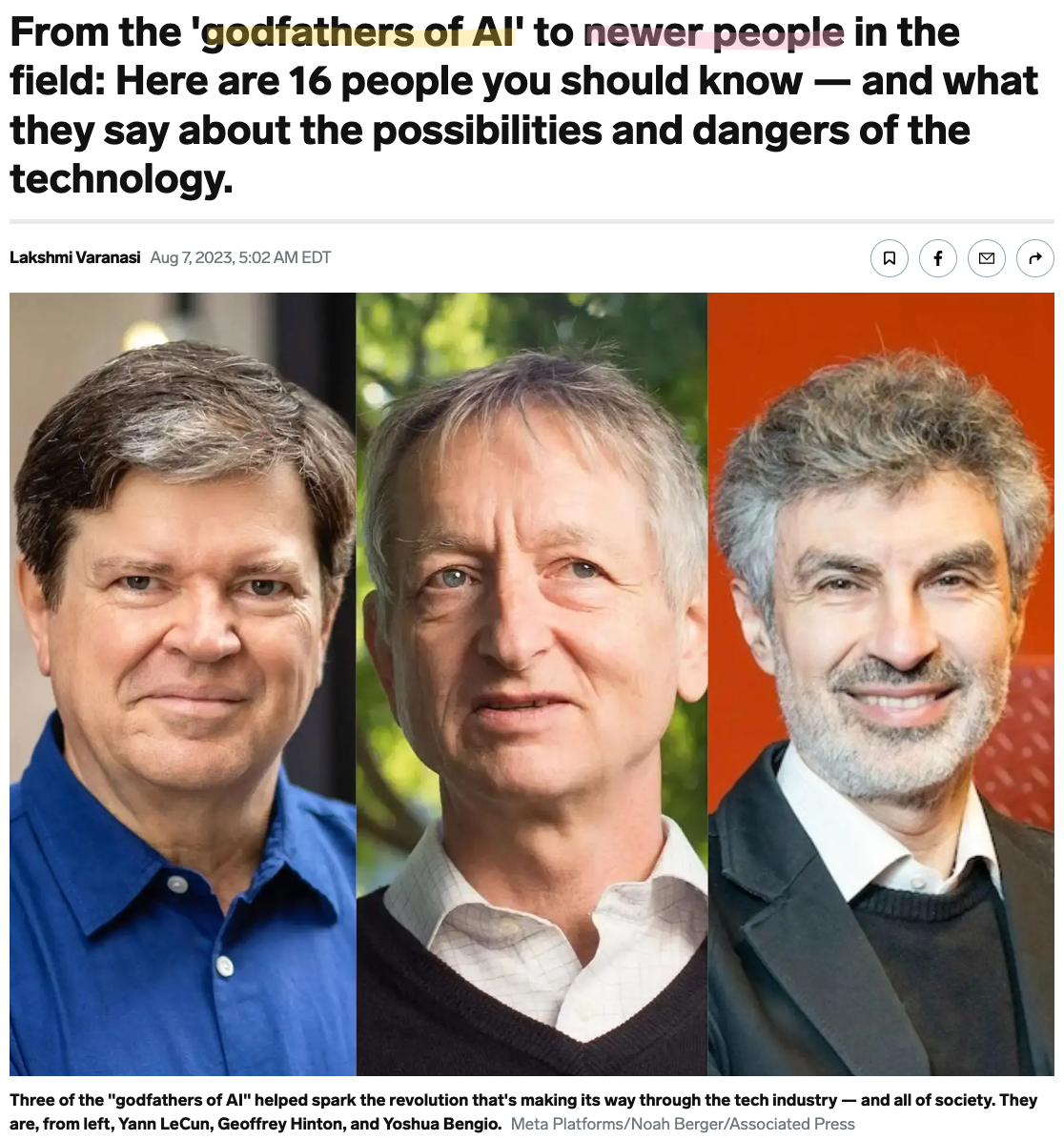

Critical Scholarship in AI

Many “newer people” are helping us ask fundamental questions:

- How do modern automated information systems differently impact people according to race, class, gender, and ability?

- What ideologies underlie the push to develop and popularize these tools?

- What can we do?

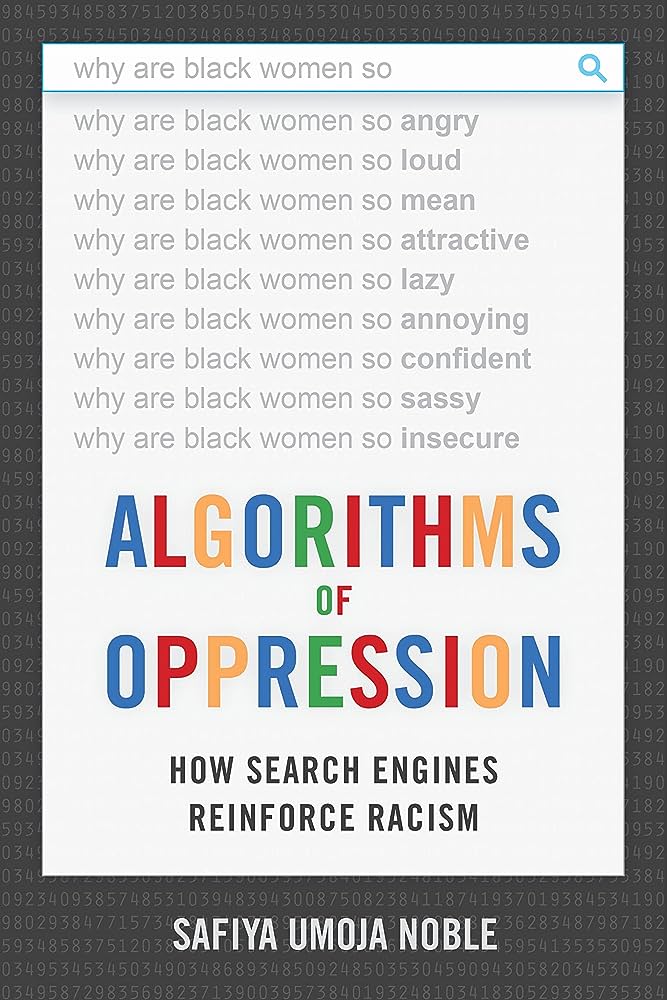

- Safiya Umoja Noble: Modern automated information systems reproduce harmful representations of marginalized identities.

- Ruha Benjamin: Modern automated information systems reinforce ideologies of white supremacy and colonialism.

- Joy Buolamwini: Modern automated information systems serve and impact people of color (esp. women of color) in harsher ways.

- Virginia Eubanks: Many modern automation systems are explicitly designed to control marginalized populations.

- Timnit Gebru: Rhetoric from contemporary tech leaders continues intellectual lineages with roots in eugenics.

Towards a Critical View

Situation: Large language models (LLMs) are now powerful and widely available.

Why? Who benefits from the spread of artificial text generators?

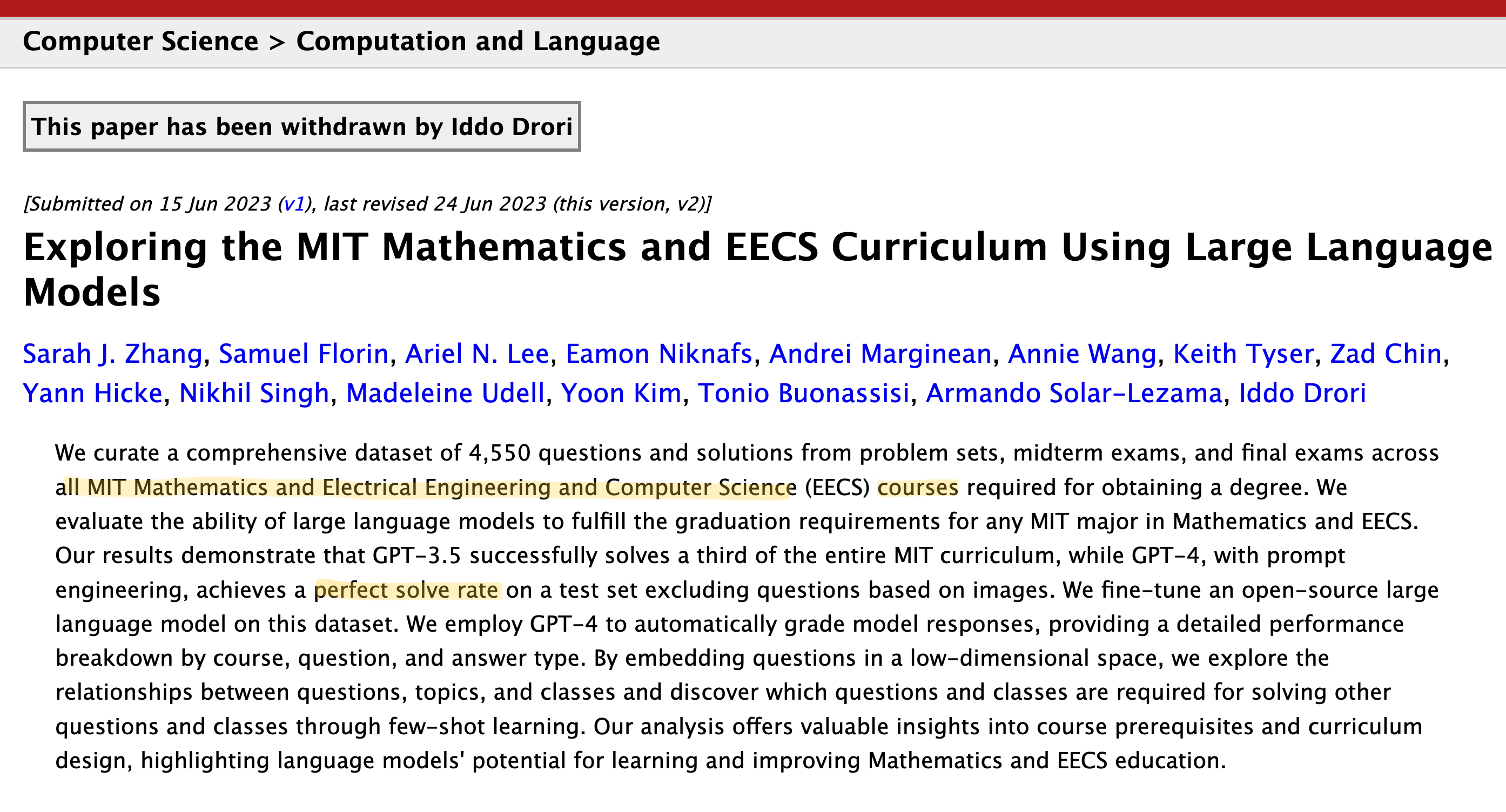

What information can I trust about the abilities of these models?

What is the actual social impact of LLMs? How does it compare to the rhetoric of motivated actors?

How can we help students cultivate critical perspectives on the role of technology in society and in their learning?

Thanks y’all!